Manage your S3 lifecycle and storage class

Overview

Amazon Simple Storage Service (Amazon S3) is an object storage service. With S3, you can store your objects with industry-leading scalability, data availability, security, and performance.

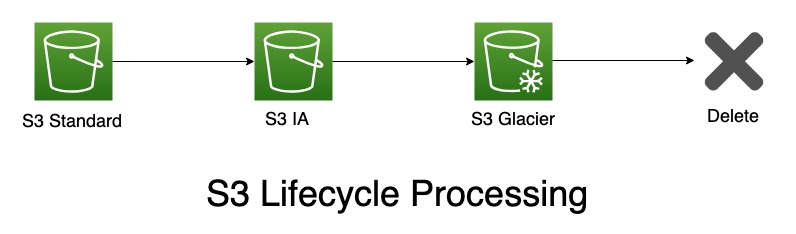

S3 Lifecycle helps you manage your objects easily and cost-effectively. When you configure a lifecycle rule, you define how long objects stay in a storage class before they transition to another one, and when they should be removed.

Storage Class

This guide covers five S3 storage classes that you can configure: S3 Standard Storage, S3 Reduced Redundancy Storage (S3 RRS), S3 Infrequent Access (S3 IA), S3 Glacier, and S3 Intelligent-Tiering.

- S3 Standard Storage: The default storage class. It is the most common choice for frequently accessed data.

- S3 Reduced Redundancy Storage (S3 RRS): Designed for frequently accessed, noncritical, reproducible data that can be stored with less redundancy than the Standard storage class.

  We recommend that you do not use this storage class, because the Standard storage class is more cost-effective.

- S3 Infrequent Access (S3 IA): The storage class for long-lived, infrequently accessed data. Its storage price is lower than S3 Standard, but requests to access the data cost more. It comes in two classes that differ in the number of Availability Zones (AZs) they use.

  - S3 Standard-Infrequent Access (S3 Standard-IA): Stores object data redundantly across multiple AZs, so it offers greater availability and resiliency than the One Zone-IA class.

  - S3 One Zone-Infrequent Access (S3 One Zone-IA): Stores object data in only one AZ. It is as durable as Standard-IA, but it is less available and less resilient, which is why its storage price is lower than S3 Standard-IA.

- S3 Glacier: Suitable for archiving data that is rarely accessed. It offers the same durability and resiliency as the Standard storage class. It has the lowest storage price of the classes listed here, but it also takes the longest to retrieve data (possibly a few hours).

- S3 Intelligent-Tiering: Designed to optimize storage costs by automatically moving data to the most cost-effective access tier, without performance impact or operational overhead. It has its own internal lifecycle: once an object has been uploaded (or moved) to S3 Intelligent-Tiering, it is automatically moved to the infrequent access tier if it has not been accessed for thirty consecutive days. Conversely, when an object in the infrequent access tier is accessed again, it is automatically moved back to the frequent access tier.

Transition

With a lifecycle rule, you can apply the following transitions to a whole bucket, or only to the objects that match a prefix or tag.

- From S3 Standard to S3 Standard-IA / S3 Intelligent-Tiering / S3 One Zone-IA / S3 Glacier after a time period (in days).

- From S3 Standard-IA to S3 Intelligent-Tiering / S3 One Zone-IA / S3 Glacier after a time period (in days).

- From S3 Intelligent-Tiering to S3 One Zone-IA / S3 Glacier after a time period (in days).

- From S3 One Zone-IA to S3 Glacier after a time period (in days).
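The transition paths listed above can be sketched as a small lookup table. This is only an illustration of the list in this section: the keys are the `StorageClass` identifiers used by the S3 API, and `can_transition` is a hypothetical helper name, not part of any AWS library.

```python
# Allowed lifecycle transitions as listed in this section
# (source storage class -> set of target storage classes).
ALLOWED_TRANSITIONS = {
    "STANDARD": {"STANDARD_IA", "INTELLIGENT_TIERING", "ONEZONE_IA", "GLACIER"},
    "STANDARD_IA": {"INTELLIGENT_TIERING", "ONEZONE_IA", "GLACIER"},
    "INTELLIGENT_TIERING": {"ONEZONE_IA", "GLACIER"},
    "ONEZONE_IA": {"GLACIER"},
    "GLACIER": set(),  # nothing in this list transitions out of Glacier
}


def can_transition(source: str, target: str) -> bool:
    """Return True if the list above allows moving from source to target."""
    return target in ALLOWED_TRANSITIONS.get(source, set())


if __name__ == "__main__":
    print(can_transition("STANDARD", "GLACIER"))
    print(can_transition("ONEZONE_IA", "STANDARD_IA"))
```

The table makes the one-way nature of lifecycle transitions visible: objects only ever move toward colder storage.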

Expiration

With lifecycle, you can also set an Expiration action to define how long objects are kept before they are deleted from your bucket (or from the scope of a prefix or tag).

Scenario

When you use Amazon S3, you may run into situations like these:

- If you upload periodic logs to a bucket, your application might need them for a week or a month. After that, you might want to delete them.

- Some documents are frequently accessed for a limited period of time. After that, they are infrequently accessed. At some point, you might not need real-time access to them, but your organization or regulations might require you to archive them for a specific period. After that, you can delete them.

- You might upload some types of data to Amazon S3 primarily for archival purposes. For example, you might archive digital media, financial and healthcare records, raw genomic sequence data, long-term database backups, and data that must be retained for regulatory compliance.

In each of these cases, you can use S3 Lifecycle to meet your needs.
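As a rough sketch, the three scenarios above could be written as lifecycle rules in the dictionary shape that boto3's `put_bucket_lifecycle_configuration` accepts. The prefixes (`logs/`, `documents/`, `archive/`), rule IDs, and day counts are all assumptions chosen for illustration, not values from this guide.

```python
import json

# Scenario 1: periodic logs that are deleted after a month.
logs_rule = {
    "ID": "expire-logs",
    "Filter": {"Prefix": "logs/"},
    "Status": "Enabled",
    "Expiration": {"Days": 30},
}

# Scenario 2: documents that cool down, get archived, then get deleted.
documents_rule = {
    "ID": "tier-then-archive-documents",
    "Filter": {"Prefix": "documents/"},
    "Status": "Enabled",
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 90, "StorageClass": "GLACIER"},
    ],
    "Expiration": {"Days": 365},
}

# Scenario 3: data uploaded mainly for archival purposes.
archive_rule = {
    "ID": "archive-quickly",
    "Filter": {"Prefix": "archive/"},
    "Status": "Enabled",
    "Transitions": [{"Days": 1, "StorageClass": "GLACIER"}],
}

lifecycle_configuration = {"Rules": [logs_rule, documents_rule, archive_rule]}

if __name__ == "__main__":
    # With AWS credentials configured, the configuration could be applied with:
    #   boto3.client("s3").put_bucket_lifecycle_configuration(
    #       Bucket="my-bucket",  # hypothetical bucket name
    #       LifecycleConfiguration=lifecycle_configuration,
    #   )
    print(json.dumps(lifecycle_configuration, indent=2))
```

The console steps later in this guide produce the same kind of configuration; the dictionary form is just convenient when rules need to be versioned or applied to many buckets.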

Prerequisites

- Create a bucket in S3.

Step by step

- Open your bucket in the S3 console and choose Management. Your lifecycle rules are listed here.

Name and scope

- Add a new lifecycle rule and give it a name. If you want the rule to affect only particular objects, enter their prefix or tags in the Add a filter to limit the scope to prefix/tags field. Otherwise, leave the field blank.

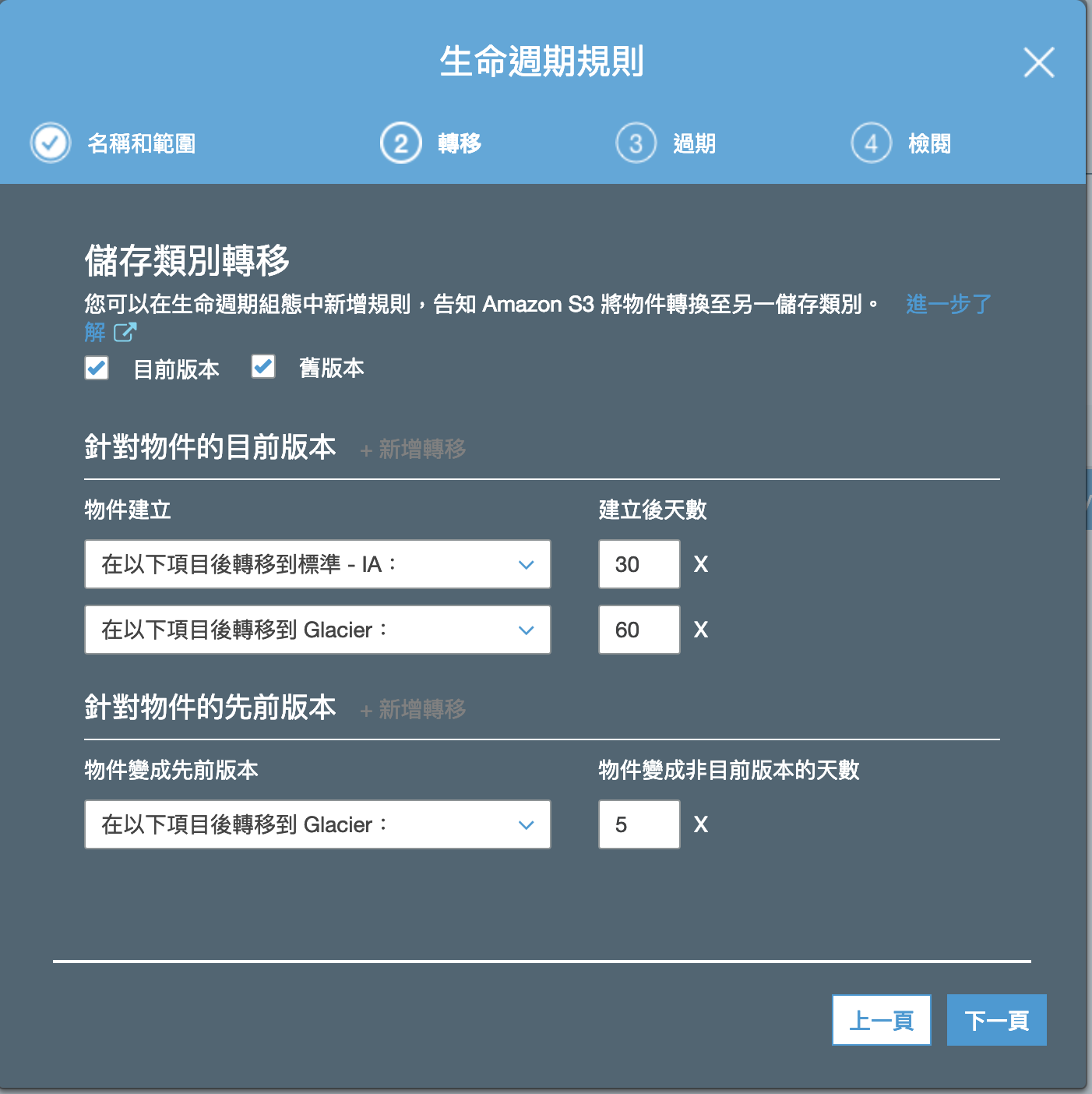
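When the same rule is written as an API configuration instead of through the console, the scope becomes the rule's `Filter` element. The helper below is a minimal sketch of the shapes that element can take (`Prefix`, `Tag`, or `And`); `build_filter` is a hypothetical name, and the example prefix and tag are assumptions.

```python
from typing import Dict, Optional


def build_filter(prefix: Optional[str] = None,
                 tags: Optional[Dict[str, str]] = None) -> dict:
    """Build the Filter element of a lifecycle rule from a prefix and/or tags."""
    tag_list = [{"Key": key, "Value": value} for key, value in (tags or {}).items()]

    if not prefix and not tag_list:
        # Blank scope: an empty prefix matches every object in the bucket.
        return {"Prefix": ""}
    if prefix and not tag_list:
        return {"Prefix": prefix}
    if not prefix and len(tag_list) == 1:
        return {"Tag": tag_list[0]}

    # Several conditions (prefix plus tags, or multiple tags) are combined with And.
    combined = {"Tags": tag_list}
    if prefix:
        combined["Prefix"] = prefix
    return {"And": combined}


if __name__ == "__main__":
    print(build_filter())
    print(build_filter(prefix="logs/"))
    print(build_filter(prefix="logs/", tags={"type": "debug"}))
```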

Transitions action element:

- You can configure different transitions for current versions and previous (noncurrent) versions of objects.

  You must enable S3 bucket versioning to have a versioned bucket. (Previous-version rules have no effect on a non-versioned bucket.)

- Rules for the current version count the days since the object was created. Rules for previous versions count the days since the object became noncurrent.

- Objects must stay in S3 Standard for at least 30 days before they can transition to Standard-IA or One Zone-IA, and objects in an S3 IA class must stay there for at least 30 days before they can transition to Glacier.

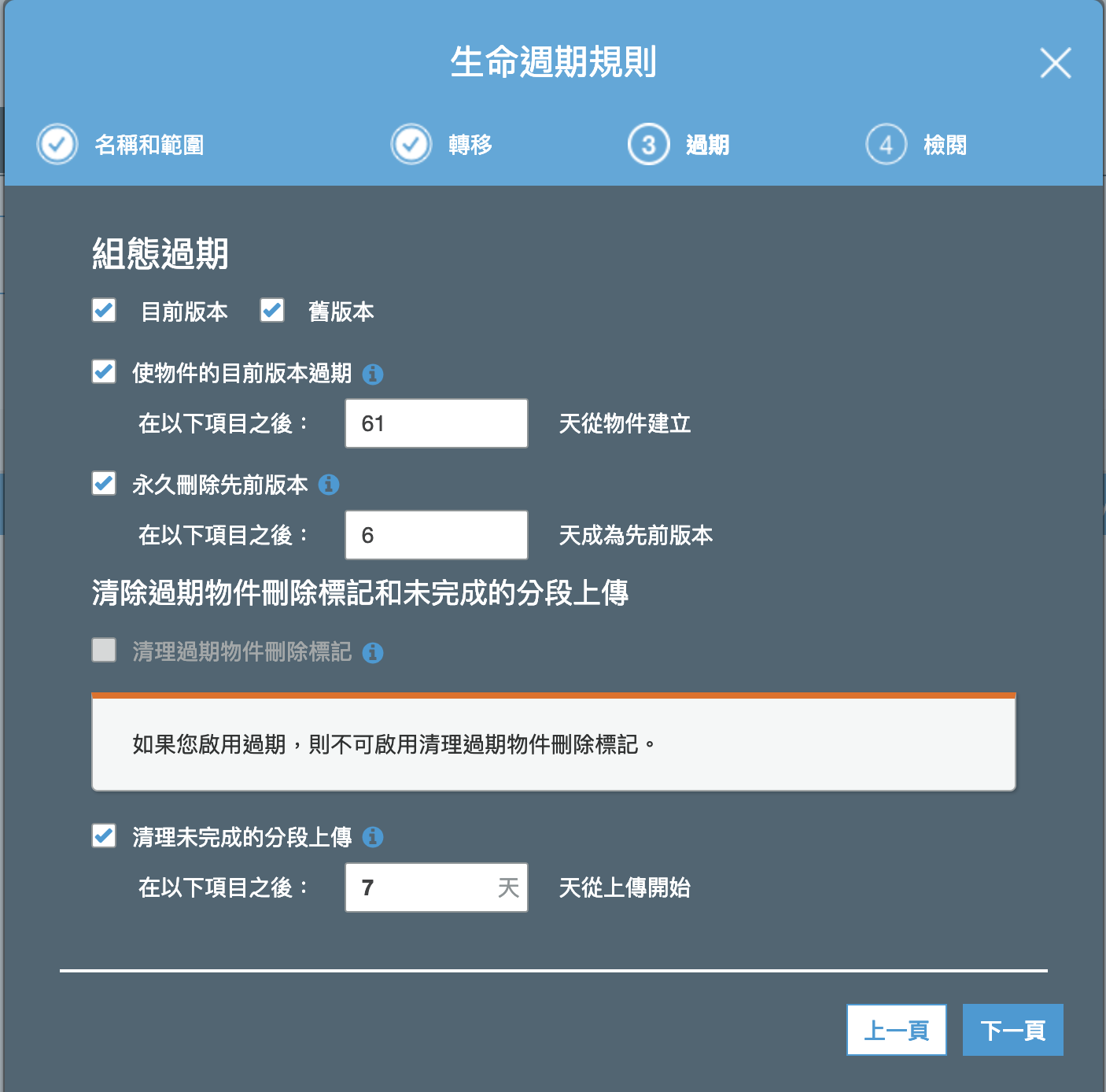
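The 30-day constraints described above can be checked before a rule is submitted. The sketch below is a simplified local check written for this guide, not the validation S3 itself performs; `check_transitions` is a hypothetical helper, and the day counts in the sample rule are assumptions.

```python
IA_CLASSES = {"STANDARD_IA", "ONEZONE_IA"}
MIN_DAYS = 30


def check_transitions(transitions):
    """Return a list of problems for (days, storage_class) transition pairs."""
    problems = []
    previous = None
    for days, storage_class in sorted(transitions, key=lambda item: item[0]):
        if storage_class in IA_CLASSES and days < MIN_DAYS:
            problems.append(f"{storage_class} needs at least {MIN_DAYS} days, got {days}")
        if (storage_class == "GLACIER" and previous is not None
                and previous[1] in IA_CLASSES and days - previous[0] < MIN_DAYS):
            problems.append(
                f"GLACIER must come at least {MIN_DAYS} days after {previous[1]}"
            )
        previous = (days, storage_class)
    return problems


# Current versions count days since creation (Days); previous versions
# count days since they became noncurrent (NoncurrentDays).
rule = {
    "ID": "tiering",
    "Filter": {"Prefix": ""},
    "Status": "Enabled",
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 60, "StorageClass": "GLACIER"},
    ],
    "NoncurrentVersionTransitions": [
        {"NoncurrentDays": 30, "StorageClass": "GLACIER"},
    ],
}

if __name__ == "__main__":
    pairs = [(t["Days"], t["StorageClass"]) for t in rule["Transitions"]]
    print(check_transitions(pairs))
```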

Expiration action element:

- The Expiration action is split into Current version (Expire) and Previous version (Permanently delete). For each of them, the time period (in days) must be greater than the time period of the last transition configured for that version type.

- A non-versioned bucket can only use the Expire current version rule. (Permanently delete previous versions has no effect on a non-versioned bucket.)

Expire current version

- For a non-versioned bucket: The Expiration action results in Amazon S3 permanently removing the object.

- For a versioning-enabled bucket:

  - If the current object version is not a delete marker, Amazon S3 adds a delete marker with a unique version ID. This makes the current version noncurrent, and the delete marker the current version.

  - Amazon S3 doesn’t take any action if there are two or more object versions and the delete marker is the current version.

  - If the current object version is the only object version and it is also a delete marker, Amazon S3 removes the expired object delete marker.

- For a versioning-suspended bucket: The Expiration action causes Amazon S3 to create a delete marker with null as the version ID. This delete marker replaces any object version with a null version ID in the version hierarchy, which effectively deletes the object.
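The cases above are easier to follow as a small simulation. The function below is a toy model of the behavior described in this section, not S3 code: an object is represented as a list of versions (newest first), and `expire_current_version`, the `versioning` values, and the dictionary keys are all hypothetical names.

```python
import uuid


def expire_current_version(versions, versioning):
    """Simulate Expire current version on one object's version list (newest first)."""
    if not versions:
        return []
    if versioning == "none":
        # Non-versioned bucket: the object is permanently removed.
        return []
    if versioning == "enabled":
        current = versions[0]
        if not current["delete_marker"]:
            # Add a delete marker with a unique version ID on top.
            marker = {"version_id": uuid.uuid4().hex, "delete_marker": True}
            return [marker] + list(versions)
        if len(versions) >= 2:
            # Delete marker is current and older versions exist: no action.
            return list(versions)
        # The only version is a delete marker: the expired marker is removed.
        return []
    if versioning == "suspended":
        # A delete marker with a null version ID replaces any null version.
        marker = {"version_id": "null", "delete_marker": True}
        kept = [v for v in versions if v["version_id"] != "null"]
        return [marker] + kept
    raise ValueError(f"unknown versioning state: {versioning}")


if __name__ == "__main__":
    history = [{"version_id": "v1", "delete_marker": False}]
    after_first = expire_current_version(history, "enabled")
    print(len(after_first), after_first[0]["delete_marker"])
```

Running the enabled case twice shows why the second expiration is a no-op: the delete marker is already the current version and an older version still exists.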

Permanently delete previous version

- Use this action to specify how long (from the time the objects became noncurrent) you want to retain noncurrent object versions before Amazon S3 permanently removes them. The deleted object can’t be recovered.
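In an API-style configuration, the two expiration settings correspond to the `Expiration` and `NoncurrentVersionExpiration` elements of a rule. The sketch below adds them to a rule and enforces the requirement from this section that each expiration period is greater than the last transition period; `add_expiration` is a hypothetical helper and the day counts are assumptions.

```python
def add_expiration(rule, current_days=None, noncurrent_days=None):
    """Return a copy of rule with expiration settings, checked against its transitions."""
    updated = dict(rule)
    last_current = max(
        (t["Days"] for t in rule.get("Transitions", [])), default=0
    )
    last_noncurrent = max(
        (t["NoncurrentDays"] for t in rule.get("NoncurrentVersionTransitions", [])),
        default=0,
    )

    if current_days is not None:
        if current_days <= last_current:
            raise ValueError("current-version expiration must come after the last transition")
        updated["Expiration"] = {"Days": current_days}

    if noncurrent_days is not None:
        if noncurrent_days <= last_noncurrent:
            raise ValueError("noncurrent-version expiration must come after the last transition")
        updated["NoncurrentVersionExpiration"] = {"NoncurrentDays": noncurrent_days}

    return updated


if __name__ == "__main__":
    base = {
        "ID": "tiering",
        "Filter": {"Prefix": ""},
        "Status": "Enabled",
        "Transitions": [{"Days": 60, "StorageClass": "GLACIER"}],
        "NoncurrentVersionTransitions": [{"NoncurrentDays": 30, "StorageClass": "GLACIER"}],
    }
    print(add_expiration(base, current_days=365, noncurrent_days=90))
```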

Other configuration

In addition to the transition and expiration actions, you can use the following lifecycle configuration actions to direct Amazon S3 to abort incomplete multipart uploads and to clean up expired object delete markers.

You can’t specify these lifecycle actions in a rule that uses a filter based on object tags.

- Clean up expired object delete markers: In a versioning-enabled bucket, a delete marker with zero noncurrent versions is referred to as an expired object delete marker. You can use this lifecycle action to direct S3 to remove expired object delete markers, which helps improve the performance of LIST operations.

  You can’t enable this action if you enable Expire current version, because that rule already includes it.

- Clean up incomplete multipart uploads: Use this element to set the maximum time (in days) that multipart uploads may remain in progress. If the applicable multipart uploads are not completed within that period, Amazon S3 aborts them.
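The two clean-up actions correspond to the `AbortIncompleteMultipartUpload` element and the `ExpiredObjectDeleteMarker` flag of the `Expiration` element. The helper below is a minimal sketch that builds such a rule and mirrors the two restrictions described in this section; `cleanup_rule`, the rule ID, and the day counts are assumptions.

```python
def cleanup_rule(rule_filter, abort_multipart_after_days=None,
                 remove_expired_delete_markers=False,
                 expire_current_after_days=None):
    """Build a lifecycle rule containing the clean-up actions from this section."""
    uses_tags = "Tag" in rule_filter or "Tags" in rule_filter.get("And", {})
    wants_cleanup = abort_multipart_after_days is not None or remove_expired_delete_markers
    if uses_tags and wants_cleanup:
        raise ValueError("clean-up actions cannot be used with a tag-based filter")
    if remove_expired_delete_markers and expire_current_after_days is not None:
        raise ValueError("Expire current version already removes expired delete markers")

    rule = {"ID": "cleanup", "Filter": rule_filter, "Status": "Enabled"}
    if abort_multipart_after_days is not None:
        rule["AbortIncompleteMultipartUpload"] = {
            "DaysAfterInitiation": abort_multipart_after_days
        }
    if remove_expired_delete_markers:
        rule["Expiration"] = {"ExpiredObjectDeleteMarker": True}
    elif expire_current_after_days is not None:
        rule["Expiration"] = {"Days": expire_current_after_days}
    return rule


if __name__ == "__main__":
    print(cleanup_rule({"Prefix": ""}, abort_multipart_after_days=7,
                       remove_expired_delete_markers=True))
```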

Test

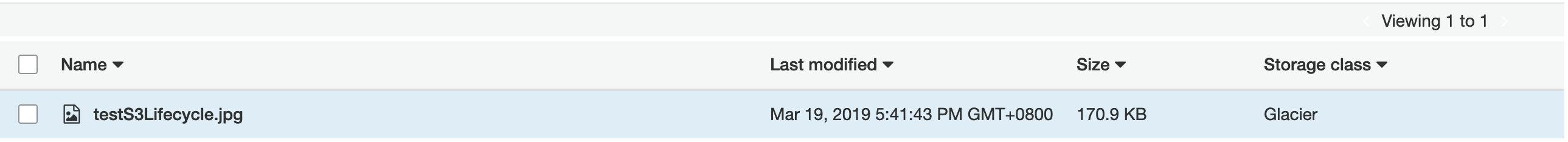

After the time period you set, you can see that the object has been moved to the storage class you configured.

In my case, to show results quickly, I set the transition to Glacier after just one day:

Here is the result after Mar 20 (I have not modified the file since Mar 19). You can see that the storage class is now Glacier:
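The same check can be scripted instead of read from the console. The sketch below assumes a boto3-style S3 client whose `head_object` response carries a `StorageClass` field (S3 omits it for objects in S3 Standard). To keep the example runnable without AWS credentials it uses a stand-in client; `FakeS3Client`, the bucket name, and the object keys are hypothetical.

```python
def storage_class_of(s3_client, bucket, key):
    """Return the storage class of an object, defaulting to STANDARD."""
    response = s3_client.head_object(Bucket=bucket, Key=key)
    # The field is absent for S3 Standard objects.
    return response.get("StorageClass", "STANDARD")


class FakeS3Client:
    """Stand-in for boto3.client("s3") so the sketch runs offline."""

    def __init__(self, objects):
        self._objects = objects  # {(bucket, key): storage_class}

    def head_object(self, Bucket, Key):
        storage_class = self._objects[(Bucket, Key)]
        response = {"ContentLength": 0}
        if storage_class != "STANDARD":
            response["StorageClass"] = storage_class
        return response


if __name__ == "__main__":
    client = FakeS3Client({
        ("my-bucket", "report.txt"): "GLACIER",
        ("my-bucket", "fresh.txt"): "STANDARD",
    })
    # With real credentials, client could instead be boto3.client("s3").
    print(storage_class_of(client, "my-bucket", "report.txt"))
    print(storage_class_of(client, "my-bucket", "fresh.txt"))
```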

Conclusion

This guide showed how to configure an S3 lifecycle. As noted at the beginning, once the rules are set, lifecycle manages your objects automatically, which saves cost and avoids manual errors.